Limits on the Cost of Nuclear Energy

No - it's not "too cheap to meter." But it also shouldn't be prohibitively expensive to construct. Estimating the cost of nuclear energy relative to other energy-generating assets is challenging, but it is necessary for making financing decisions. We just have to be aware of who has their fingers on the spreadsheet. Is it a wind and solar zealot, a fossil profiteer, or a nuclear startup? The following is a little exercise to see what the cost limits might be for nuclear energy.

Levelized Cost of Energy

There are many reports on the Levelized Cost of Energy (LCOE) of different energy harvesting assets. The most widely available LCOE data, and probably the most cited by researchers, is Lazard's [1]. The reports rank highly in Google searches, and they are free, simple, and readable. But we should keep in mind that Lazard is a financial asset company with US$220.9 billion under management as of March 2022, and that it is fully engaged in sustainability and ESG cheerleading for its "green" investment portfolio. Its stated aim is to "invest in enterprises that financially prosper while protecting and preserving human and natural capital."

Because not all energy sources are dispatchable, LCOE is just one piece of the puzzle in deciding how to build a low-cost grid that still meets demand most of the time. Building a fully solar grid simply because solar has the lowest LCOE would result in a pre-industrial society that can only operate during sunny daytime hours. The utility must have sufficient dispatchable capacity and energy storage to meet demand through a windless, cloudy week. That said, LCOE is easy to think about, and there's no question that an on-demand power source with the lowest LCOE and zero carbon emissions would be the ideal choice for new grid capacity.

There are various LCOE estimation methods [2] that account for the capital, operating, and fuel costs over the lifetime of a project. I have not seen studies that compare predicted and actual LCOEs after a project reaches end of life. LCOE estimates also make significant assumptions about project lifetime, fuel costs, interest rates, tax rates, and inflation rates. Different energy assets are more or less sensitive to different rates and assumptions, and wrong assumptions can completely invalidate comparisons. The uncertainties on these inputs are large enough that LCOE can be misleading. Most researchers and financial analysts do not estimate LCOE uncertainties, and such estimates would probably end up similarly erroneous. For example, wind and solar LCOEs often overestimate project lifetime and power output, leave out decommissioning costs, and leave out the transmission infrastructure improvements required to sell power from those distributed sources. This is on top of the energy storage and partially idle on-demand power capacity required to prop up intermittent power sources. LCOE simply does not include the true cost of incorporating an energy asset into a functional grid that delivers power on demand.
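For reference, the standard discounted-cash-flow definition of LCOE divides the present value of lifetime costs by the present value of lifetime energy. The sketch below is a minimal version of that textbook formula, assuming all capital is spent at year 0 and that fixed O&M, fuel, and output are constant every year with no taxes, inflation, degradation, or decommissioning. Every input in the usage loop is a hypothetical placeholder, not data from Lazard or any other report cited here; the point is only to show how much the result moves when just the lifetime and discount-rate assumptions change.

```python
def lcoe(capex, fixed_om_per_yr, fuel_per_mwh, capacity_mw,
         capacity_factor, lifetime_years, discount_rate):
    """Levelized cost of energy in $/MWh (simplified textbook form).

    LCOE = sum_t cost_t / (1 + r)^t  /  sum_t energy_t / (1 + r)^t

    Assumptions: all capital is spent at t = 0; fixed O&M, fuel and
    output are constant in years 1..N; taxes, inflation, degradation
    and decommissioning are ignored.
    """
    annual_mwh = capacity_mw * 8760 * capacity_factor
    pv_cost = capex  # overnight capital, not discounted (t = 0)
    pv_energy = 0.0
    for year in range(1, lifetime_years + 1):
        discount = (1 + discount_rate) ** -year
        pv_cost += (fixed_om_per_yr + fuel_per_mwh * annual_mwh) * discount
        pv_energy += annual_mwh * discount
    return pv_cost / pv_energy


if __name__ == "__main__":
    # Hypothetical 1 MW plant: $1,000/kW capex, $20k/yr fixed O&M,
    # $5/MWh fuel, 90% capacity factor. Only lifetime and rate vary.
    for lifetime in (20, 40):
        for rate in (0.04, 0.08):
            value = lcoe(1_000_000, 20_000, 5.0, 1.0, 0.9, lifetime, rate)
            print(f"lifetime={lifetime} y, rate={rate:.0%}: {value:5.1f} $/MWh")
```

In this form a $/MWh fuel cost passes straight through to the LCOE, while capital is amplified by the discount rate and spread over the assumed lifetime, which is why capital-heavy assets are the most sensitive to these assumptions.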

![Figure 2. Minimum LCOEs around the USA. "Minimum cost technology for each county, including externalities (air emissions and CO2), restrictions via assumed availability zones, and reference case assumptions for capital and fuel costs. Numbers in legend refer to the number of counties in which that technology is the lowest cost."[1a]](/assets/images/min-LCOE-regions-3aa2f6097bea4480a7515de204eaf120.png)

Lower Bounds for Near-Term Fission

Many reports show that nuclear fission power plants constructed today are not competitive on LCOE in most US regions, even when the energy storage costs [1,3] required for wind and solar are included. I have spent some time thinking about how these nuclear LCOEs could be lowered. The impetus, of course, is the extreme energy density of nuclear fuels, which at first blush suggests that it should take much less effort (energy, resources, human time, cost) to extract and use nuclear heat than to harvest energy sources that require huge quantities of chemical fuel or extremely large amounts of material and land.

There are many ideas and philosophies about how to improve nuclear energy's costs, be it new reactor designs, new fuels, factory manufacturing, additive manufacturing, deregulation, autonomous operations, blah blah blah.

Build it like a natural gas plant

Fission (CCNG basis)

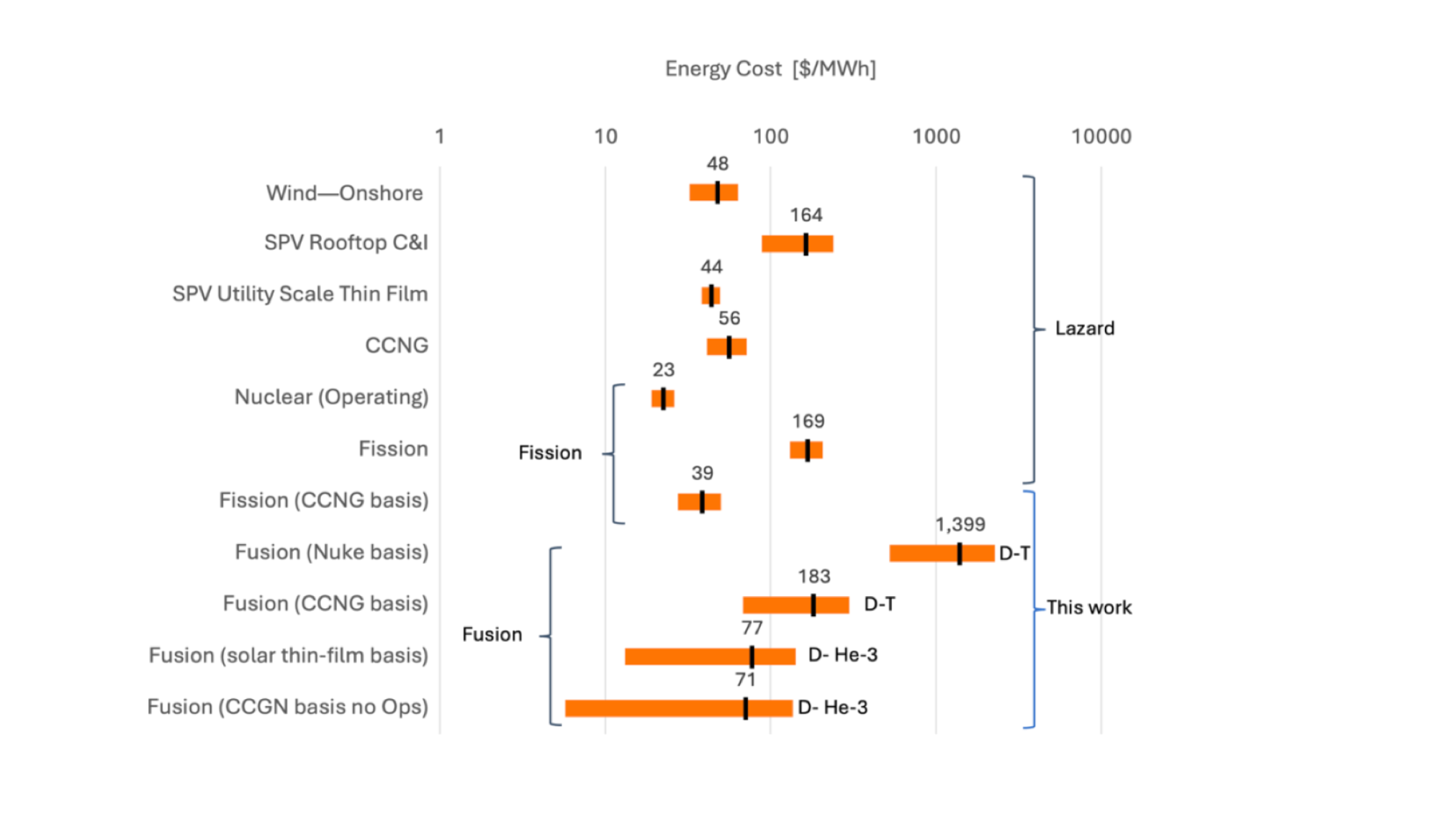

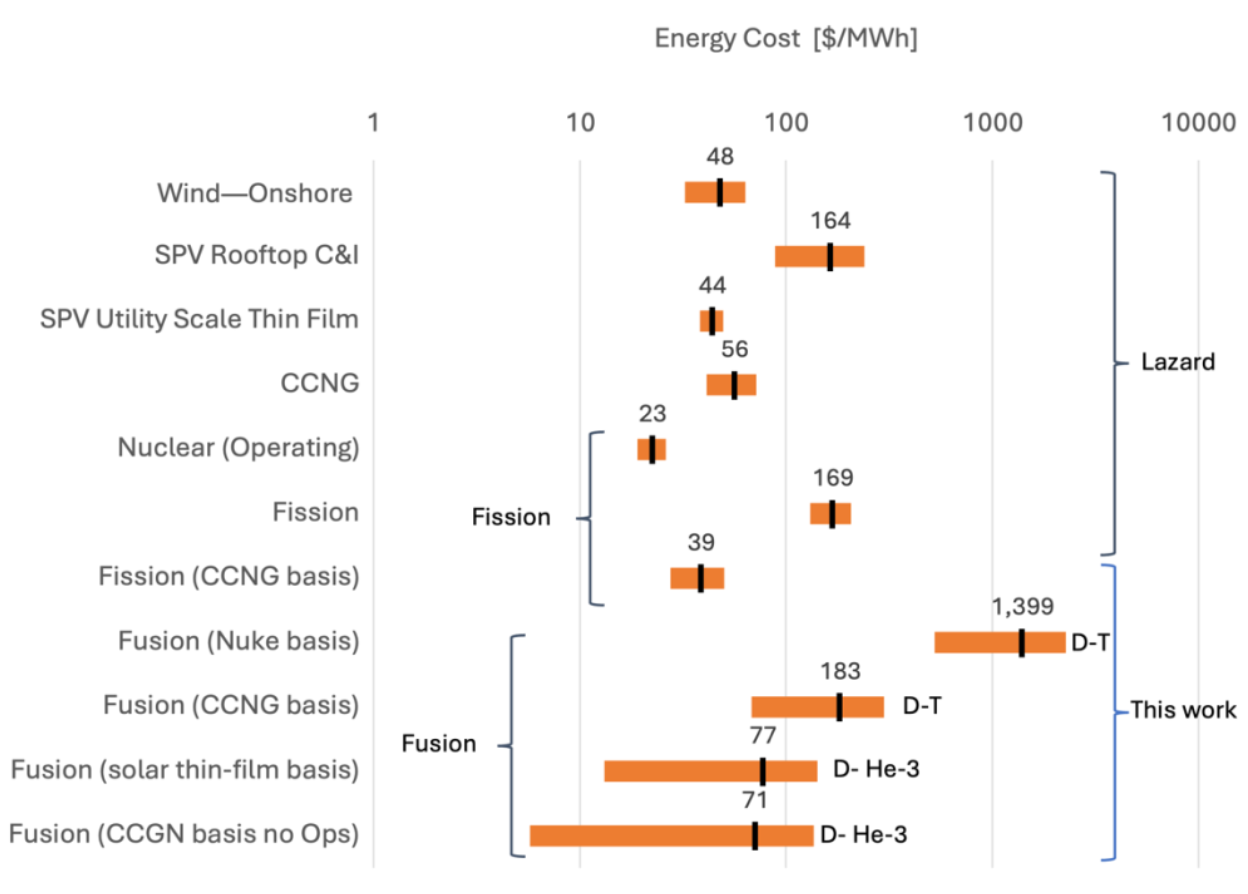

To bound the problem a little, I thought about what the lowest nuclear LCOE could be in the near term. What is a rough lower limit for electricity costs derived from nuclear fission? I bypassed the complex and uncertain arguments about hypothetical nuclear energy systems and looked at existing power generating technologies to come up with a simple cost comparison using substitution.

The simple comparison is made using Lazard's 2021 data (which still had pre-Brandon fossil fuel prices) and is based on the idea that there is no good reason for combined cycle natural gas (CCNG) and nuclear plants to be built or operated at significantly different costs. Both have roughly the same number of systems and parts. If anything, CCNG should have higher operating costs because of its higher temperatures and more corrosive substances. CCNG operating costs should also be higher because refueling happens continuously, with large volume and mass flows, whereas nuclear refueling occurs every 2 years in the legacy fleet and every 10 years or longer in many new designs. CCNG plants are also often operated in load-following mode, with rapid power and thermal cycling that requires higher-quality parts and still wears them out faster. CCNG fuel costs are obviously higher and more volatile than nuclear fuel costs.

I took the cost inputs for CCNG and replaced CCNG's fuel cost, thermal efficiency, and capacity factor with those of a nuclear power plant. I kept CCNG's 20-year project lifetime rather than the 40 years Lazard uses for new nuclear. Both CCNG and nuclear are turbomachinery-based energy harvesting systems, but by using CCNG's inputs, we eliminate "nuclear" penalties like ridiculously large nuclear cost escalation factors, indirect costs, ridiculous operating schemes, and regulatory burden. We get rid of the fluff. Compared to CCNG, the fuel cost goes down and the thermal efficiency goes down. The cost of storing spent nuclear fuel is built into the fuel cost by law.

When nuclear power plants are built and operated like natural gas plants, we get the LCOE labeled 'Nuclear [CCNG basis]' in Figure 1. This nuclear LCOE is half that of natural gas and one-seventh of Lazard's nuclear LCOE estimate. On this basis, nuclear fission has the potential to provide on-demand energy at the lowest cost.
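The substitution behind the 'Nuclear [CCNG basis]' bar can be sketched with a simple annualized LCOE formula: overnight capital times a capital recovery factor, plus fixed O&M, spread over annual output, plus variable O&M and fuel. This is an illustrative reconstruction, not the author's spreadsheet. The input values are hypothetical placeholders rather than Lazard's 2021 figures, so the printed numbers will not reproduce Figure 1. Only fuel price, thermal efficiency, and capacity factor are swapped; capital, O&M, and the 20-year lifetime stay at the CCNG values.

```python
MMBTU_PER_MWH = 3.412  # thermal energy content of 1 MWh expressed in MMBtu


def capital_recovery_factor(rate, years):
    """Annual payment per dollar of capital over `years` at `rate`."""
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)


def simple_lcoe(capex_per_kw, fixed_om_per_kw_yr, var_om_per_mwh,
                fuel_per_mmbtu, efficiency, capacity_factor,
                lifetime_years, discount_rate):
    """Simple annualized LCOE in $/MWh of electricity."""
    crf = capital_recovery_factor(discount_rate, lifetime_years)
    mwh_per_kw_yr = 8.760 * capacity_factor  # 8760 h/yr, kWh -> MWh
    fixed = (capex_per_kw * crf + fixed_om_per_kw_yr) / mwh_per_kw_yr
    fuel = fuel_per_mmbtu * MMBTU_PER_MWH / efficiency  # heat bought per MWh(e)
    return fixed + var_om_per_mwh + fuel


# Hypothetical CCNG inputs (placeholders, not Lazard data).
ccng = dict(capex_per_kw=1000.0, fixed_om_per_kw_yr=15.0, var_om_per_mwh=3.0,
            fuel_per_mmbtu=3.5, efficiency=0.55, capacity_factor=0.60,
            lifetime_years=20, discount_rate=0.07)

# Same plant costs; swap in fission-like fuel price, efficiency, capacity factor.
nuclear_ccng_basis = {**ccng, "fuel_per_mmbtu": 0.7, "efficiency": 0.33,
                      "capacity_factor": 0.92}

if __name__ == "__main__":
    for name, inputs in (("CCNG", ccng), ("Nuclear [CCNG basis]", nuclear_ccng_basis)):
        print(f"{name:22s} {simple_lcoe(**inputs):6.1f} $/MWh")
```

Under these placeholder inputs, the lower thermal efficiency raises the heat needed per MWh, but the much cheaper fuel and higher capacity factor more than offset it.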

What about the radiation?

You might think we have to build and operate a nuclear plant in a completely different way than a natural gas plant to account for the radiation risk posed by nuclear fuel. And for many reactor designs, you'd be right. These are systems with too high a power density, many interacting components and chemicals, and an annoying proclivity to melt or damage themselves. They are high-performance systems running on a knife-edge, requiring containment and safety systems to prevent fission products from being released to the environment. It's like a Ferrari racing through tight turns in a long race. It carries a lot of kinetic energy, plus chemical energy in its tank, that must be carefully controlled and dissipated to avoid disaster. Everyone is on edge. The driver has to be hyper-aware of speed, turning rate, and the machine's health; the pit crew carefully maintains all the parts before and during the race, praying that the brakes don't give out and that the cooling systems keep working; and because things inevitably fail, the racetrack owners have built massive barriers to contain a speeding car, with ambulances and fire trucks standing by. A lot of effort goes into preventing the vehicle controls from failing, and into reducing the damage when they do.

Taking the same approach for a nuclear reactor is expensive. But nuclear reactors don't have to be built and operated like Ferraris on a racetrack. Instead, they can be built like Corollas and driven in a giant parking lot: slow, simple, reliable, and cheap. In fact, for some designs out there, the Corolla metaphor doesn't go far enough. The reactor with the lowest power density (and power per unit surface area) now under development is the Ultra Safe MMR. With extremely low power and high thermal mass, the reactor can't damage itself during accidents, and it can easily dissipate excess heat without safety systems or active components. In terms of performance and risk, it is more like a golf cart than a Corolla: slow and not very exciting, but it still gets the job done at low cost. This is how radiation risk can be decoupled from the way the plant is built and operated, and it's why I believe nuclear fission plants can be built and operated like natural gas plants.
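A rough way to see why low power and high thermal mass matter is an adiabatic heat-up estimate: assume that after shutdown no heat leaves the core at all, and ask how much the decay heat alone would warm it. The sketch below uses one common form of the Way-Wigner decay-heat approximation, P/P0 = 0.0622 [t^-0.2 - (t + T)^-0.2], which is only a rough fit, usually quoted as valid from about 10 seconds to roughly 100 days after shutdown. The two cores, their masses, and the graphite-like heat capacity are hypothetical placeholders, not the Ultra Safe MMR's actual parameters.

```python
WAY_WIGNER_COEFF = 0.0622  # one common form of the Way-Wigner fit


def decay_heat_fraction(t_s, t_op_s):
    """Decay power as a fraction of operating power, t_s seconds after
    shutdown of a core that ran for t_op_s seconds (Way-Wigner fit)."""
    return WAY_WIGNER_COEFF * (t_s ** -0.2 - (t_s + t_op_s) ** -0.2)


def decay_energy_j(p0_w, t_s, t_op_s):
    """Decay heat (J) released between shutdown and t_s, using the
    closed-form integral of decay_heat_fraction times p0_w."""
    term = t_s ** 0.8 - ((t_s + t_op_s) ** 0.8 - t_op_s ** 0.8)
    return p0_w * WAY_WIGNER_COEFF * term / 0.8


def adiabatic_heatup_k(p0_w, mass_kg, cp_j_per_kg_k, t_s, t_op_s):
    """Temperature rise (K) if no heat at all leaves the core: a
    pessimistic bound that ignores conduction, convection and radiation."""
    return decay_energy_j(p0_w, t_s, t_op_s) / (mass_kg * cp_j_per_kg_k)


if __name__ == "__main__":
    ONE_YEAR_S = 3.156e7
    THREE_DAYS_S = 3 * 24 * 3600
    CP_J_PER_KG_K = 1500.0  # hypothetical, graphite-like heat capacity
    # Hypothetical cores: (label, thermal power in W, heated mass in kg).
    cores = [("compact, high power density", 3.0e9, 5.0e5),
             ("low power density, large thermal mass", 1.5e7, 2.0e5)]
    for label, p0_w, mass_kg in cores:
        rise = adiabatic_heatup_k(p0_w, mass_kg, CP_J_PER_KG_K, THREE_DAYS_S, ONE_YEAR_S)
        print(f"{label}: about {rise:,.0f} K rise after 72 h with no cooling")
```

Because the decay energy scales with operating power while the temperature rise scales inversely with heated mass, the ratio of power to thermal mass is what separates a core that must be actively cooled from one that can simply soak up its decay heat.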

Another consideration is that nuclear plants have been over-hardened and over-regulated for decades. Cost-benefit analyses of catastrophic nuclear accidents do not indicate that radiation is an important cost factor, even in extreme accidents like Chernobyl or Fukushima. Indeed, most of the damage comes from the government and industry response to the accident rather than from any direct or inferred radiation deaths. This damage would occur with or without safety and containment systems or nuclear cost escalations.

I'm solidly in the "As Low As Reasonably Achievable" (ALARA) camp. That said, it is likely that radiological dose limits have been arbitrarily chosen and have placed undue financial burden on nuclear power plants. In energy-starved futures, radiological considerations, which are often too small to be measured, may well be sidelined.

Rough Estimates for Long-Term Fusion Systems

Given recent investments in fusion concepts, it is worth repeating the cost exercise for fusion systems in 4 cases that match some of the system attributes advertised by fusion proponents. The first case is the most relevant for current and near-term prototypes like ITER, SPARC, and NIF. ITER and SPARC are tokamaks, which use magnetic fields to confine a D-T plasma in a torus. NIF is a laser-based D-T inertial confinement fusion experiment at Lawrence Livermore National Laboratory. The last 3 cases concern simpler systems that use aneutronic reactions or simplified technologies, which are more challenging to achieve but portend a promising fusion future.

- Big fusion systems w/ current nuclear cost basis

- Big fusion systems w/ CCNG cost basis

- Direct energy conversion fusion w/ solar cost basis

- Direct energy conversion fusion w/ half-price CCNG cost basis


Conventional Fusion

1. Fusion (Nuke basis)

First, I assumed that fusion systems would have attributes similar to current nuclear projects: big and complex, but with low or zero fuel cost, which is ostensibly a big benefit for fusion. This is representative of many of the devices under development, like ITER, SPARC and ARC, NIF-derived systems, and various compression target systems. This may even be unfair to normal fission reactors, as these fusion reactors are bulkier, introduce many new complex and expensive systems to start and sustain fusion reactions, have 1.5x-2x higher parasitic power losses, and require on-site fuel reprocessing to feed the reactor. Just look at the ITER site layout, where most of the area is covered by systems that don't exist in fission plants, such as fuel reprocessing and control power systems.

I only penalized the fusion plant with the expected reduced capacity factor, shorter lifetime, and parasitic power losses. Capital costs are, unrealistically, the same as for a fission power plant. The operating costs of a fusion system will be even greater because there are more, and more complex, systems to maintain, and because radiation damage will be 5-30x greater per unit of thermal energy. The components are getting hammered, which requires better materials, more shielding, more replacements, and more inspection and maintenance. And the real kicker is the low capacity factor, because a fusion reactor has to be shut down so often for maintenance and repairs. Decommissioning costs can also be expected to be higher for fusion, because fusion plants produce 50-100x the radiological waste volume per unit of net energy. I used operating cost multipliers of 1 and 5 for the lower and upper bound estimates.

This set of assumptions results in a roughly 25x higher cost of energy for fusion devices than for CCNG today.
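The way these penalties stack up can be sketched with a simple annualized LCOE formula that charges capital and O&M per kW of gross capacity but divides by net output after parasitic loads. This is a hypothetical illustration, not the calculation behind the 25x figure: the fission-like cost basis and every fusion penalty value (capacity factor, lifetime, net output fraction) are placeholders, and only the O&M multipliers of 1 and 5 come from the text.

```python
def capital_recovery_factor(rate, years):
    """Annual payment per dollar of capital over `years` at `rate`."""
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)


def net_lcoe(capex_per_kw, fixed_om_per_kw_yr, fuel_per_mwh, capacity_factor,
             lifetime_years, discount_rate, net_fraction=1.0, om_multiplier=1.0):
    """$/MWh of net electricity.

    Capex and fixed O&M are per kW of gross capacity; net_fraction is the
    share of gross output left after parasitic loads; om_multiplier scales
    O&M for harder-to-maintain plants. Fuel is given per net MWh.
    """
    crf = capital_recovery_factor(discount_rate, lifetime_years)
    net_mwh_per_kw_yr = 8.760 * capacity_factor * net_fraction
    annual_cost = capex_per_kw * crf + fixed_om_per_kw_yr * om_multiplier
    return annual_cost / net_mwh_per_kw_yr + fuel_per_mwh


# Hypothetical fission-like cost basis (placeholders, not Lazard data).
BASIS = dict(capex_per_kw=7000.0, fixed_om_per_kw_yr=120.0, discount_rate=0.07)

fission = net_lcoe(**BASIS, fuel_per_mwh=6.0, capacity_factor=0.92, lifetime_years=40)
# Same capital cost; fusion pays no fuel but takes hypothetical penalties.
fusion_low = net_lcoe(**BASIS, fuel_per_mwh=0.0, capacity_factor=0.5,
                      lifetime_years=30, net_fraction=0.7, om_multiplier=1.0)
fusion_high = net_lcoe(**BASIS, fuel_per_mwh=0.0, capacity_factor=0.3,
                       lifetime_years=20, net_fraction=0.5, om_multiplier=5.0)

if __name__ == "__main__":
    print(f"fission basis: {fission:7.1f} $/MWh")
    print(f"fusion range:  {fusion_low:7.1f} to {fusion_high:7.1f} $/MWh")
```

Because the annual capital and O&M cost is divided by capacity factor times net output fraction, the penalties multiply rather than add, and zero fuel cost does little to offset them.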

2. Fusion (CCNG basis)

Now, what if fusion reactors turn out to be really easy to build and require very few "nuclear" cost escalations? I redid the calculation using a CCNG cost basis, characterized by the low capital and operating costs of a non-nuclear thermal conversion system. It's not a realistic assumption, because a fusion power plant of any kind is, by physical requirement, far more complex and capital intensive than either CCNG or a normal fission power plant. Unlike some of the fission designs discussed earlier, fusion has no clear path to smaller size, fewer systems, or overall simplification. I gave the fusion system the same low operating costs as a CCNG plant, but again, it's difficult to imagine why that would be the case. I still penalized the fusion system with a low capacity factor, shorter lifetime, and parasitic power losses.

In this case, fusion systems would produce electrical power at 3x the cost of CCNG power. The upper and lower bounds reflect the upper and lower estimates of the various parameters.

We could be more optimistic about lifetimes and parasitic power losses, but these back-of-the-envelope estimates suggest a challenging LCOE hurdle for commercial fusion systems in the next several decades. Something has to change.
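Being more optimistic about lifetime and parasitic losses helps less than one might expect. The sketch below, with hypothetical parameters and an assumed 7% discount rate, computes how the capital term per net MWh scales relative to a 20-year plant with no parasitic losses, holding capex per kW and capacity factor fixed. Because of discounting, the capital recovery factor flattens toward the discount rate as lifetime grows, so doubling lifetime from 20 to 40 years cuts the capital term by roughly a fifth rather than by half, while the capital term scales as 1/(net output fraction), so losing half the gross output doubles it.

```python
def capital_recovery_factor(rate, years):
    """Annual payment per dollar of capital over `years` at `rate`."""
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)


def relative_capital_cost(years, net_fraction, rate=0.07, ref_years=20):
    """Capital cost per net MWh relative to a ref_years plant with no
    parasitic load, same capex per kW and same capacity factor."""
    return (capital_recovery_factor(rate, years)
            / capital_recovery_factor(rate, ref_years)) / net_fraction


if __name__ == "__main__":
    for years in (20, 30, 40, 60):
        row = "  ".join(f"{relative_capital_cost(years, f):5.2f}" for f in (1.0, 0.8, 0.5))
        print(f"{years:2d} y | net 1.0 / 0.8 / 0.5: {row}")
```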

What about direct energy conversion?

3. Fusion (Solar basis) and 4. Fusion (Half-price CCNG basis)

The benefits of direct energy conversion may have motivated a half-billion-dollar investment in Helion Energy. The concept uses the D-He3 fusion reaction in a field-reversed configuration. D-He3 has lower neutronicity than D-T fusion, but it is still 2-5x higher than that of uranium fission. Higher neutronicity means more radiation damage and more maintenance. He-3 is rare on Earth, but Helion believes it can economically produce He-3 by fusing deuterium in a plasma accelerator. To address the high capital and operating costs of conventional power plants, Helion intends to generate electricity without turbines, eliminating or reducing those costs. In Helion's words:

Our device directly recaptures electricity; it does not use heat to create steam to turn a turbine, nor does it require the immense energy input of cryogenic superconducting magnets. Our technical approach reduces efficiency loss, which is key to our ability to commercialize electricity from fusion at very low costs.

Solid-state power conversion is not limited to Helion's flavor of nuclear fusion. Fission systems can also generate power with solid-state methods. Thermoelectrics [4], thermionics [5], and thermophotovoltaics [6] all generate electricity from temperature differences, with varying efficiencies and challenges. Magnetohydrodynamic generators [7] are another way to generate power. There was even a US project, Direct Energy Conversion from Fission Reactors [8], that aimed to convert the kinetic energy of fission fragments directly into electrical energy. But for many reasons, including accumulated investment, technology momentum, and the sheer difficulty of implementation, the cheapest way to generate electricity from heat is still a heat engine cycle, such as a Rankine steam cycle or a Brayton gas cycle, driving turbines.
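Thermoelectrics, thermionics, thermophotovoltaics, and turbine cycles alike are all heat engines, so none of them can beat the Carnot limit, η = 1 - T_cold/T_hot, set by the hot-side and cold-side temperatures. The short sketch below computes that ceiling; the temperatures in the usage loop are illustrative round numbers, not data for any particular design, and real converters reach only a fraction of the limit.

```python
def carnot_efficiency(t_hot_k, t_cold_k):
    """Upper bound on heat-to-work efficiency between two temperatures (K)."""
    if not 0.0 < t_cold_k < t_hot_k:
        raise ValueError("need 0 < t_cold_k < t_hot_k (temperatures in kelvin)")
    return 1.0 - t_cold_k / t_hot_k


if __name__ == "__main__":
    T_SINK_K = 300.0  # illustrative ambient heat sink
    for t_hot_k in (600.0, 900.0, 1200.0, 1500.0):
        print(f"T_hot = {t_hot_k:6.0f} K -> Carnot limit {carnot_efficiency(t_hot_k, T_SINK_K):.0%}")
```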

Helion's system appears simple and elegant with high efficiencies - I can't wait to find out more. For now, let's take them at their word and assume all the theory adds up and the practical and regulatory challenges are resolved - what could the cost of energy eventually be? Helion provides an answer:

We estimate that Helion’s fusion power will be one of the lowest cost sources of electricity. Helion’s cost of electricity production is projected to be $0.01 per kWh without assuming any economies of scale from mass production, carbon credits, or government incentives.

There are four main components of electricity cost: 1) Capital cost 2) Operating cost 3) Up-time 4) Fuel cost.

Helion’s fusion powerplant is projected to have negligible fuel cost, low operating cost, high up-time and competitive capital cost. Our machines require a much lower cost on capital equipment because we can do fusion so efficiently and don’t require large steam turbines, cooling towers, or other plant requirements of traditional fusion approaches

Without thermal power conversion, Helion's system would be a lot more like a wind turbine or solar panel than a conventional power plant, but with more significant control, thermal, radiation, and corrosion challenges that require more expensive hardware and maintenance. In cost terms, it could resemble a solar panel or wind turbine running at 100% capacity factor at all times, or a half-price CCNG plant with no operating or fuel costs; either would fall in the range of $0.01 to $0.015/kWh. This is a truly Promethean objective, to be applauded and supported. That is the best case.
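The two best-case comparisons reduce to simple arithmetic. For a plant whose costs are essentially all fixed (capital and fixed O&M), LCOE scales inversely with capacity factor, and a plant with no operating or fuel costs is left with only its annualized capital. The sketch below shows both with hypothetical placeholder inputs rather than Lazard's numbers, so its outputs illustrate the method and need not land exactly in the range quoted above.

```python
def rescale_for_capacity_factor(lcoe_per_mwh, cf_from, cf_to):
    """LCOE of an all-fixed-cost asset moved to a new capacity factor:
    the same annual cost is spread over cf_to / cf_from as many MWh."""
    return lcoe_per_mwh * cf_from / cf_to


def capital_only_lcoe(capex_per_kw, rate, years, capacity_factor, price_factor=1.0):
    """$/MWh from annualized capital alone (no O&M, no fuel)."""
    growth = (1 + rate) ** years
    crf = rate * growth / (growth - 1)  # capital recovery factor
    return capex_per_kw * price_factor * crf / (8.760 * capacity_factor)


if __name__ == "__main__":
    # Hypothetical solar: $40/MWh at a 25% capacity factor, moved to 100%.
    solar_always_on = rescale_for_capacity_factor(40.0, 0.25, 1.0)
    # Hypothetical CCNG: $1,000/kW capex at half price, 7%, 20 years, 90% CF.
    half_price_ccng = capital_only_lcoe(1000.0, 0.07, 20, 0.90, price_factor=0.5)
    for name, value in (("solar at 100% CF", solar_always_on),
                        ("half-price CCNG, no O&M or fuel", half_price_ccng)):
        print(f"{name:32s} {value / 1000:.4f} $/kWh")
```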

I do suspect this is a too-good-to-be-true-yet situation, and that Helion's engineers are still in the early stages of development, unaware of myriad complications surrounding capital costs, the fuel cycle, corrosion, radiation damage and shielding, waste, power density, and accidents: complications that cannot be addressed or cost-modeled until some intermediate problems are solved and prototypes are built. These unknown unknowns may have negative cost effects, which is reflected in the upper bounds of the estimates. There's also the matter of the D-T fusion systems required to support a D-He3 fuel cycle.

Conclusion

These LCOE estimates for future systems are a bit messy and imprecise. It's probably best to just attempt various approaches and see what happens, resolving the cost questions and comparisons through implementation. However, there are limited technical and financial resources, which creates an opportunity cost for each attempt. Indeed, we might end up investing too heavily in ultimately expensive sources and cripple our ability to deploy ultimately cheap energy sources. Let's hope that expensive energy sources are allowed to flounder and cheap ones are able to flourish.

![Lazard 2021 LCOE data [1] for CCNG and fission, and extrapolation to fission (CCNG basis) through various assumptions.](/img/nuclear_edu/lcoe/Screenshot-2024-02-07-at-2.54.32-PM.png)

[1] Lazard, “Levelized Cost of Energy, Levelized Cost of Storage, and Levelized Cost of Hydrogen.” [link](https://www.lazard.com/perspective/levelized-cost-of-energy-levelized-cost-of-storage-and-levelized-cost-of-hydrogen/)

[1a] The Full Cost of Electricity (FCe-)

[2] Foster, “LCOE models: A comparison of the theoretical frameworks and key assumptions,” 2014.

[3] Ziegler et al., “Storage Requirements and Costs of Shaping Renewable Energy Toward Grid Decarbonization.”

[4] Y. Lee and B. Bairstow, “Radioisotope Power Systems Reference Book for Mission Designers and Planners,” Radioisotope Power System Program Office, JPL, California Inst. of Tech., Pasadena, CA, Sep. 2015, p. 96. [Online]. Available: http://ntrs.nasa.gov/search.jsp?R=20160001769&hterms=emmrtg&qs=N=0&Ntk=All&Ntt=emmrtg&Ntx=mode matchallpartial&Nm=123%7CCollection%7CNASA STI%7C%7C17%7CCollection%7CNACA.

[5] S. S. Voss, “TOPAZ II SYSTEM DESCRIPTION.”

[6] A. LaPotin et al., “Thermophotovoltaic Efficiency of 40%,” Nature, vol. 604, Apr. 2022, doi: 10.1038/s41586-022-04473-y.

[7] A. Krishnan and B. S. Jinshah, “Magneto Hydrodynamic Power Generation,” Int. J. Sci. Res. Publ., vol. 3, no. 6, pp. 1–11, 2013.

[8] “Direct Energy Conversion Fission Reactor: Annual Report for the Period,” Jan. 2002.