Ambient Light Removal Using Patterned Illumination

A common problem in image capture for active illumination methods is ambient light. Say you want to probe a scene using your own light source. You might be using patterned illumination for 3D reconstruction or a light pulse to measure distance. You blast the scene with your light source and use an image sensor to capture how that light interacts with the scene. Many techniques rely on this kind of active illumination and capture. But ambient light, which covers a broad range of wavelengths, contaminates the captured light and can ruin your measurement. You have to actively mitigate the ambient or global contamination, which was one of the things I tackled at TetraVue. This can be achieved through various means:

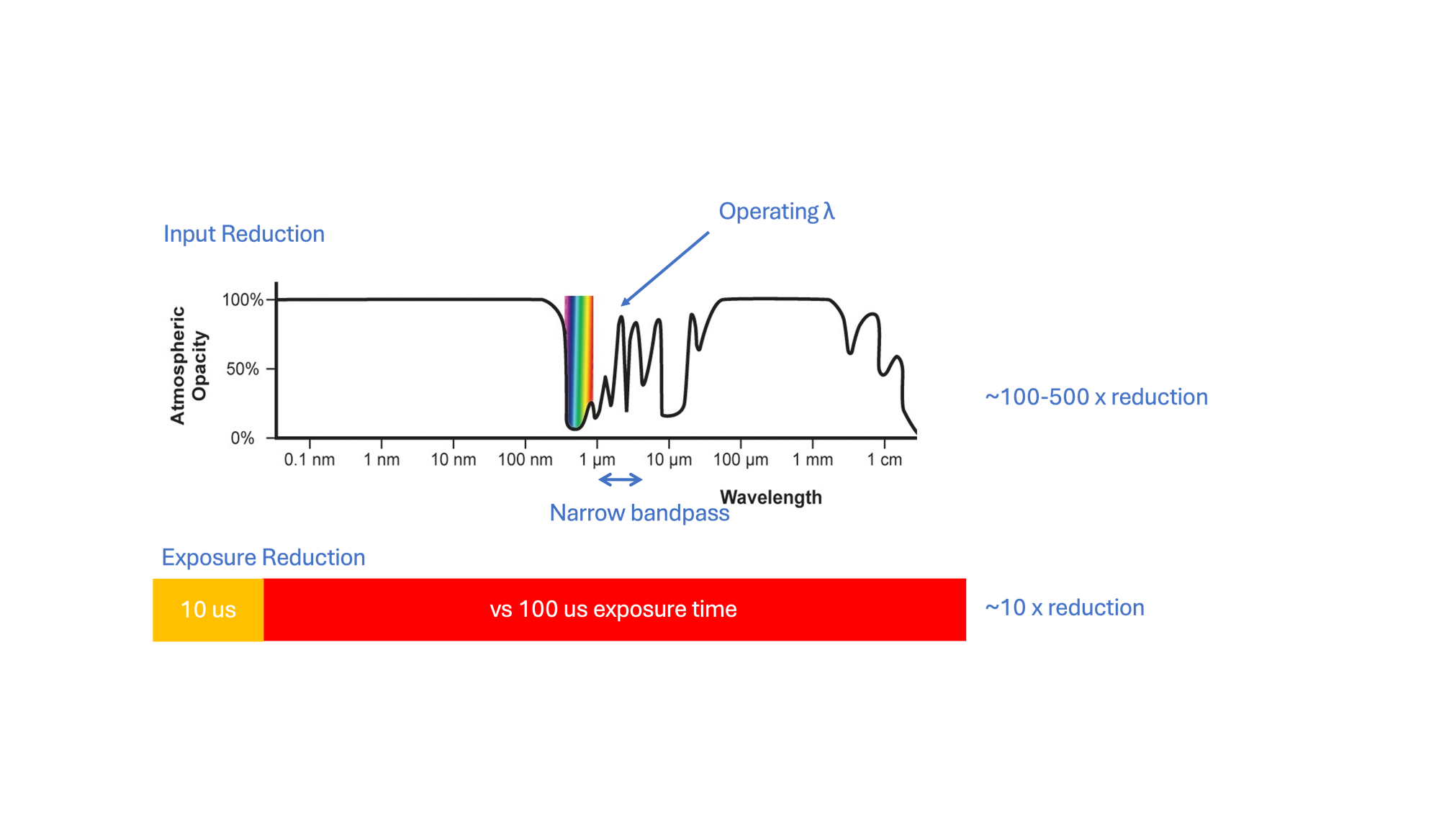

- Bandpass. A narrowband light source paired with a tight bandpass filter on the capture side will throw out most of the ambient light.

- Careful choice of wavelengths or other properties of the light. Choose wavelengths where ambient sources are especially weak (e.g. atmospheric absorption lines in the NIR). Also pay attention to polarization.

- Synchronized, pulsed illumination and capture. Reduce the illumination and capture time as much as possible, so that less ambient light is collected per exposure.

- Make your technique ambient invariant.

- Measure the ambient contamination and remove it.

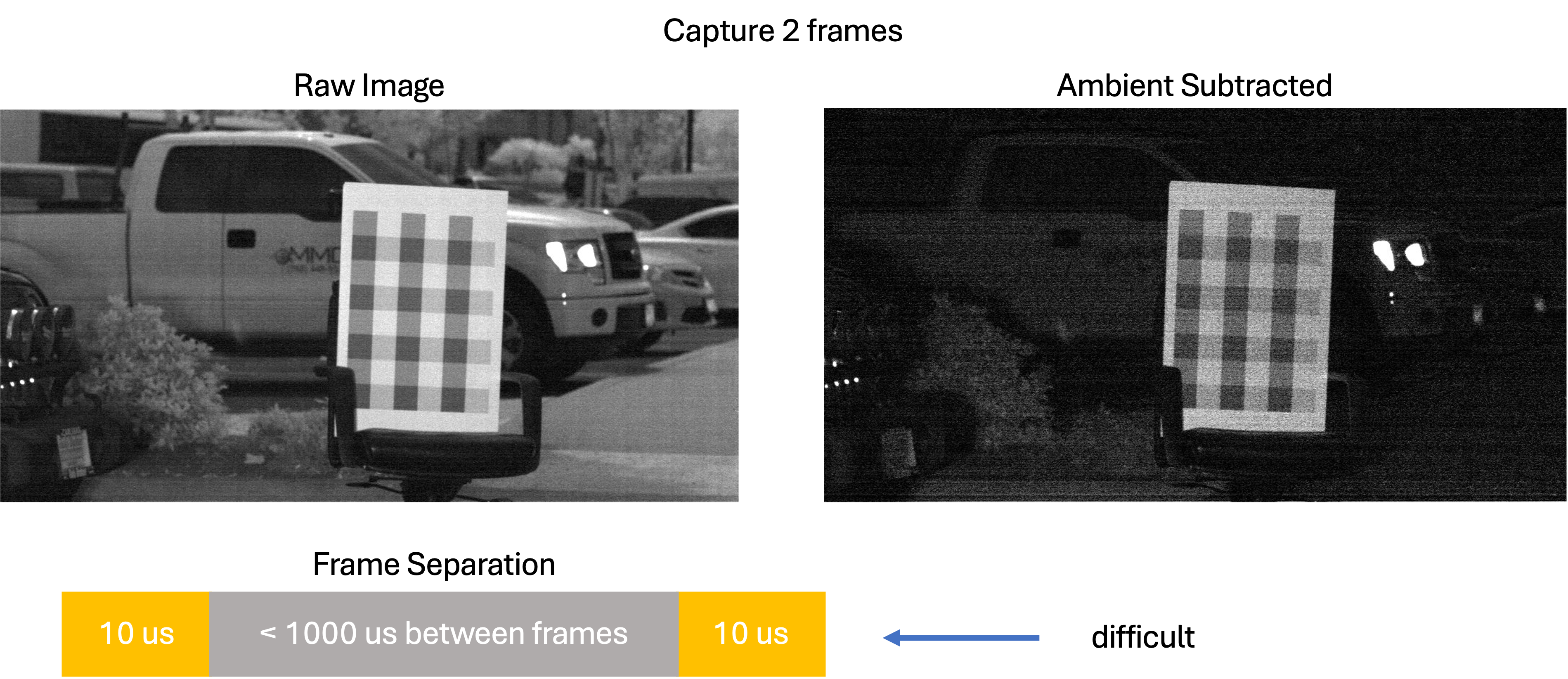

For a 2D image sensor, there are various ways to estimate the ambient component. You can take back-to-back measurements, one with and one without the user illumination. The unlit frame is the ambient component, and subtracting it from the lit frame leaves the signal. But the two frames are not captured at the same instant, so this may not work well if the scene is changing or if the signal-to-noise ratio is very low.
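The back-to-back approach can be sketched in a few lines. This is a minimal illustration, assuming a static scene and two frames with identical exposure settings; the function name `subtract_ambient` and the synthetic frames are made up for the example.

```python
import numpy as np

def subtract_ambient(lit_frame, unlit_frame):
    """Estimate the active-illumination signal from a lit/unlit frame pair."""
    # Cast to float first: subtracting unsigned sensor data directly
    # would wrap around wherever noise makes the unlit pixel brighter.
    lit = lit_frame.astype(np.float64)
    unlit = unlit_frame.astype(np.float64)
    # Clip at zero, since a negative amount of signal light is not physical.
    return np.clip(lit - unlit, 0.0, None)

# Synthetic example: ambient light plus a weaker active signal.
rng = np.random.default_rng(0)
ambient = rng.integers(50, 100, size=(4, 4))
signal = rng.integers(0, 20, size=(4, 4))
lit_frame = (ambient + signal).astype(np.uint16)   # illumination on
unlit_frame = ambient.astype(np.uint16)            # illumination off

recovered = subtract_ambient(lit_frame, unlit_frame)
```

With real data the two frames carry independent noise, so the difference is noisier than either frame, which is why this approach struggles when the signal is weak relative to the ambient level.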

If you require better temporal synchronization, you could use a second sensor that samples the same light bundle, with an exposure adjacent to the main sensor's exposure. This can be achieved using a beam splitter, bandpass-patterned pixels (like an RGB sensor), or very closely spaced apertures. It is the usual challenge of multiplexing data on the receiver, whether with multiple sensors, multiple pixels, or multiple exposures. Or, if exact sync is needed and the light source is sufficiently monochromatic, you could use a tight adjacent bandpass filter (e.g. 750-752 nm vs. 748-750 nm).

At TetraVue, I came up with another way that is temporally synchronous.1 This method estimates the ambient contamination by finding the global component of the illumination. It doubles as a correction for multipath light, another challenge in depth sensing systems. Multipath light is signal light that scatters between objects in the scene before returning to the camera, where it erroneously weights the depth information. Multipath will, for example, bloat the corners of reflective walls. The multipath component cannot be easily differentiated from the ambient light.

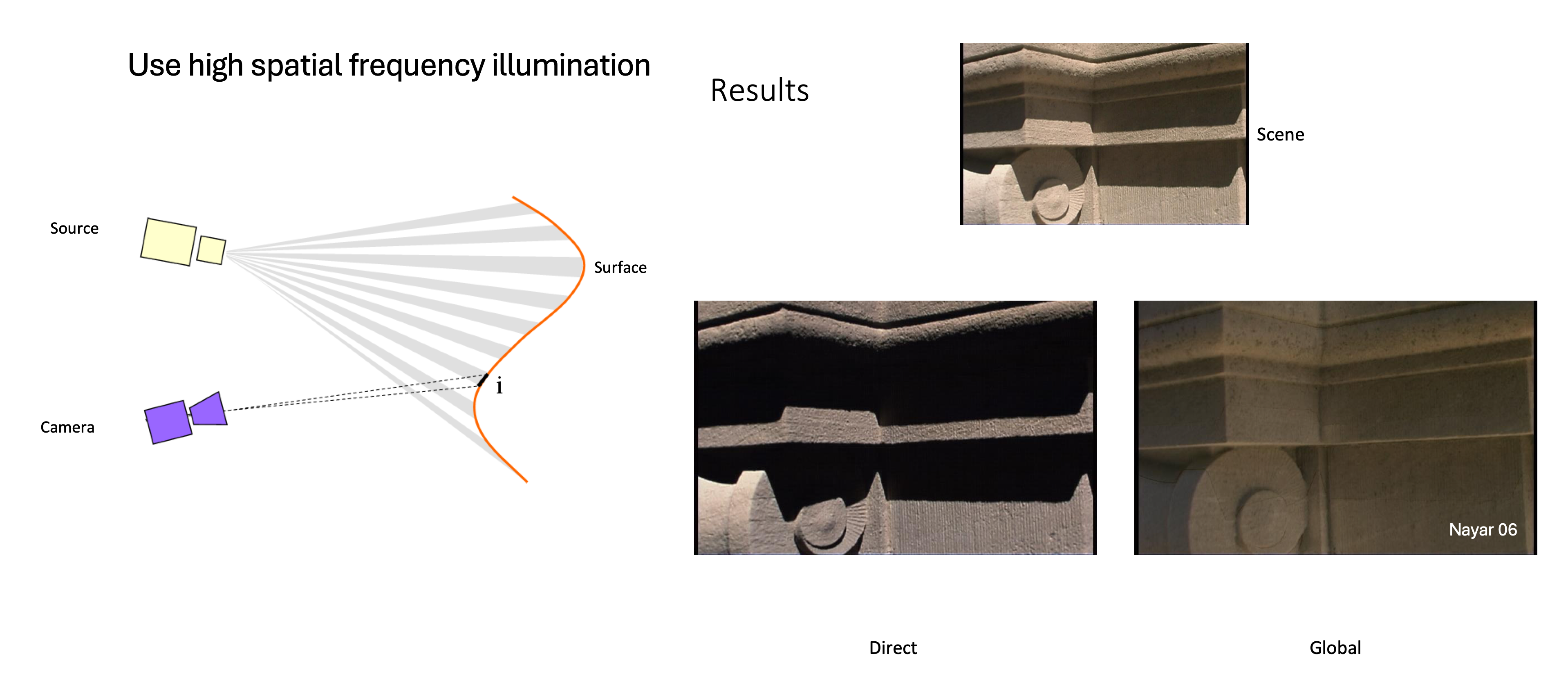

We aim to correct or remove multipath error by separating the total measured light into direct and global illumination components, those being the single-path and multipath signals, respectively. The best-known lighting-based techniques were first expounded by [Nay06] and usually involve multiple images. The single-image techniques involve patterned illumination, such as high-contrast dark and bright stripes or dark and bright speckle patterns with about twice the angular spacing of the pixels, to reconstruct a lower-resolution version of the direct and global components.

This can be considered multiplexing on the illumination side. The illumination pattern must have the same FOV as the imaging assembly in order for the stripes to have a constant angular width with distance. The dark and bright points in the scene serve as control and test points respectively. The bright test points measure the entire signal, both the direct and the global illumination. The dark control spots measure only the global component. It is assumed that nearby areas have the same behavior, and so the global component in the dark can be subtracted from the bright spot to find the direct component, which necessitates some loss in resolution. In practice, we take into consideration the contrast and fraction of the scene illuminated.
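The control/test point idea can be sketched as below. This is a simplified illustration, not the production method: it assumes a perfect-contrast pattern, takes the text's model literally (bright points see direct plus global, dark points see global only), and ignores the contrast and illuminated-fraction corrections mentioned above. The function name and the `block` parameter are hypothetical.

```python
import numpy as np

def separate_direct_global(image, block=2):
    """Per-block direct/global estimate from one patterned-illumination image."""
    h, w = image.shape
    # Crop so the image tiles evenly into block x block neighborhoods.
    h_crop, w_crop = h - h % block, w - w % block
    tiles = image[:h_crop, :w_crop].astype(np.float64)
    tiles = tiles.reshape(h_crop // block, block, w_crop // block, block)
    bright = tiles.max(axis=(1, 3))  # test points: direct + global
    dark = tiles.min(axis=(1, 3))    # control points: global only
    # Output has 1/block the resolution of the input in each dimension.
    return bright - dark, dark

# Synthetic scene: vertical stripes with a 2-pixel period.
pattern = np.tile([1.0, 0.0], 3)          # bright, dark, bright, ...
direct_true, global_true = 10.0, 3.0
image = np.tile(direct_true * pattern + global_true, (4, 1))  # shape (4, 6)

direct_est, global_est = separate_direct_global(image, block=2)
```

Each block must contain at least one bright and one dark point, which is why the pattern spacing is tied to the pixel spacing and why the output resolution drops by the block size.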

Ambient light falls into the global component, and so high-frequency patterned illumination offers another method for ambient subtraction, one that is simultaneous with the measurement. The first version of patterned illumination for direct lighting estimation has a resolution loss. [Sub17] outlines a method that maintains resolution and has consistently lower RMSE. The concept is the same, except the analysis occurs in frequency space. When illuminating a scene with a high-frequency pattern, the direct component of the light preserves the high frequencies of the illumination. The global component, and with it the ambient light, is predominantly low frequency, regardless of the illuminating frequency. This difference in the frequency domain allows the two components to be separated using a basis representation.
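To make the frequency-space intuition concrete, here is a 1D toy example. It is not the basis-representation method of [Sub17]; it swaps in plain synchronous demodulation, and it assumes a full-contrast sinusoidal pattern of known frequency and direct and global components that are band-limited well below that frequency. All names and parameter values are illustrative.

```python
import numpy as np

def lowpass(signal, cutoff):
    """Ideal low-pass filter: keep FFT bins 0..cutoff, zero the rest."""
    spectrum = np.fft.rfft(signal)
    spectrum[cutoff + 1:] = 0.0
    return np.fft.irfft(spectrum, n=signal.size)

def separate_by_frequency(measured, pattern_freq, cutoff):
    """Split measured = direct * pattern + global_, with
    pattern = 0.5 * (1 + cos(2*pi*pattern_freq*x))."""
    n = measured.size
    x = np.arange(n) / n
    carrier = np.cos(2 * np.pi * pattern_freq * x)
    # Mixing with the carrier shifts the modulated direct term to baseband;
    # the low-pass then leaves 0.5 * direct.
    direct = 2.0 * lowpass(measured * 2.0 * carrier, cutoff)
    # The baseband of the raw measurement is 0.5 * direct + global_.
    global_est = lowpass(measured, cutoff) - 0.5 * direct
    return direct, global_est

n = 256
x = np.arange(n) / n
direct_true = 1.0 + 0.5 * np.cos(2 * np.pi * 2 * x)   # slowly varying
global_true = 0.3 + 0.1 * np.sin(2 * np.pi * 1 * x)   # includes ambient
pattern = 0.5 * (1.0 + np.cos(2 * np.pi * 32 * x))    # high-frequency stripes
measured = direct_true * pattern + global_true

direct_est, global_est = separate_by_frequency(measured, pattern_freq=32, cutoff=8)
```

Unlike the block-wise min/max approach, both estimates come out at the full sample resolution, as long as the scene content stays below the cutoff.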

There are other ways to estimate ambient light when the ambient and active illumination have different temporal behavior, which is discussed in more detail here. The ambient light could be constant during the exposure while the active illumination is pulsed and even temporally sliced. This information can be used to separate the two components, but it can run into signal-to-noise challenges.
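One hypothetical way to exploit this difference is to capture two gates of different length that both contain the whole pulse. The sketch below is only an illustration under those assumptions (constant ambient rate, pulse fully inside both gates, no noise); the function name and the numbers are invented for the example.

```python
import numpy as np

def separate_pulse_from_ambient(short_gate, long_gate, t_short, t_long):
    """Solve gate = pulse + ambient_rate * t for two gate lengths."""
    short_gate = np.asarray(short_gate, dtype=np.float64)
    long_gate = np.asarray(long_gate, dtype=np.float64)
    # Ambient accumulates linearly with exposure time; the pulse does not.
    ambient_rate = (long_gate - short_gate) / (t_long - t_short)
    pulse = short_gate - ambient_rate * t_short
    return pulse, ambient_rate

# Two pixels with different pulse energy and ambient level (arbitrary units).
pulse_true = np.array([40.0, 10.0])
rate_true = np.array([5.0, 8.0])
t_short, t_long = 2.0, 6.0
short_gate = pulse_true + rate_true * t_short
long_gate = pulse_true + rate_true * t_long

pulse_est, rate_est = separate_pulse_from_ambient(short_gate, long_gate, t_short, t_long)
```

With real measurements the subtraction adds the noise of both gates into the ambient estimate, which is the signal-to-noise challenge mentioned above.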